Amazon Web Services (AWS) offers well-known resources for anyone looking for cheap (if not free) computing power or storage. It is flexible enough to get you started in minutes, and in this post I’ll describe a way to use S3 (Simple Storage Service) to take off-site backups of your data.

The process is quite simple, but the following assumes that your original data is stored on a Linux server. If not, some of the steps below will differ slightly, but the overall process should remain similar.

Create Account and Set Up S3

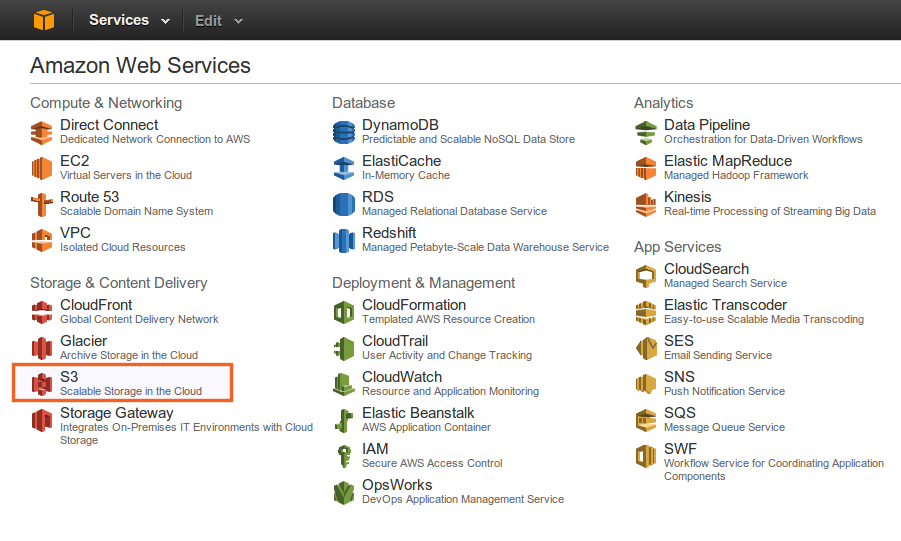

First things first: you need to sign up or log in to AWS. Once logged in, open the console and click on the S3 service link.

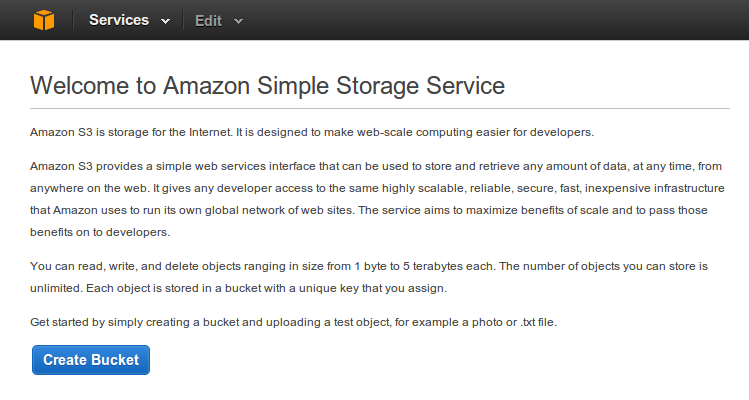

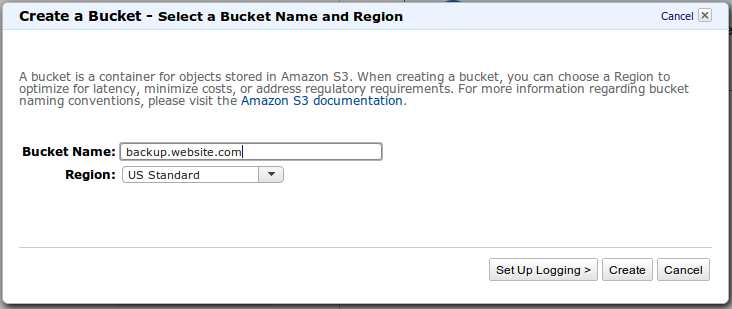

The first time you access it, you’ll be invited to create a new bucket. Think of a bucket as a set of data that you want to save. For instance, if you are managing multiple web sites, it is a good idea to create one bucket for each site (and not one big global bucket).

So to get started, simply create a new bucket to add it to the list. You can enable logging right away, but this can also be done at a later stage, so you can skip it for now.

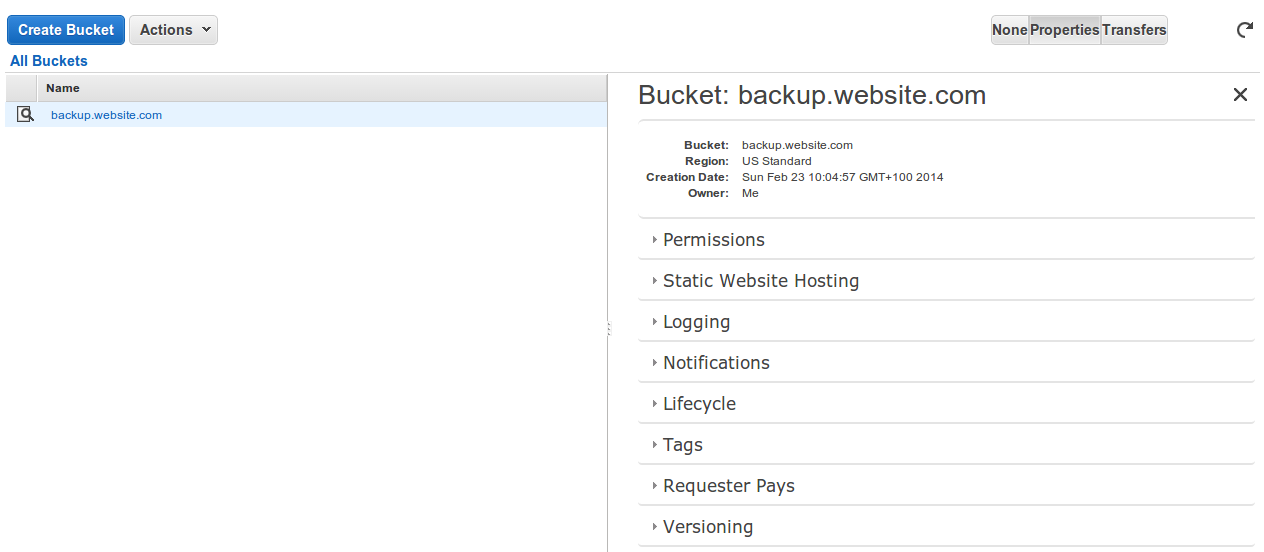

Once the bucket is created, you’ll be redirected to the bucket list and will have access to the properties on the right side of the screen.

From there, an important aspect is to set up user permissions so that you can control who can access this bucket.

Set up User Permission

Amazon strongly recommends enabling IAM (Identity and Access Management) on your account. To keep it simple, we’ll just create a new group and a new user that only has permission to manipulate the bucket that we have just created. This way, if anything happens, only this bucket will be impacted.

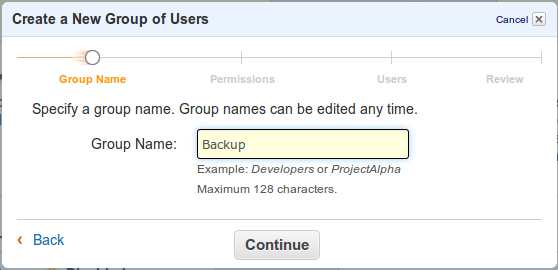

Create a New Group

Specify a name for the new group as instructed in the modal and continue to the next step.

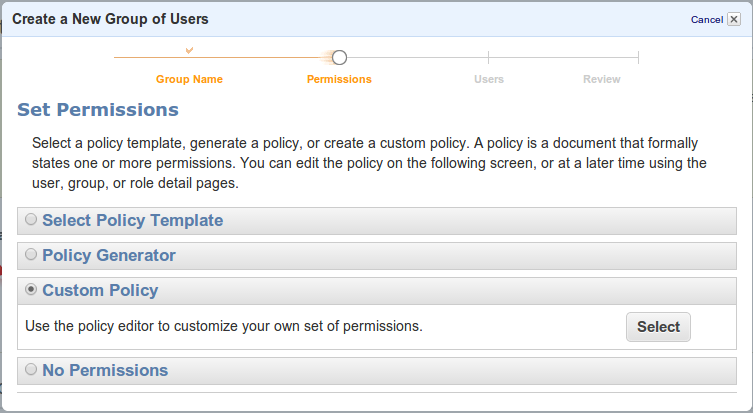

Set Custom Policies to the Group

Since we want to ensure that only a specific bucket can be accessed, we’ll define policies accordingly. This requires defining a custom policy.

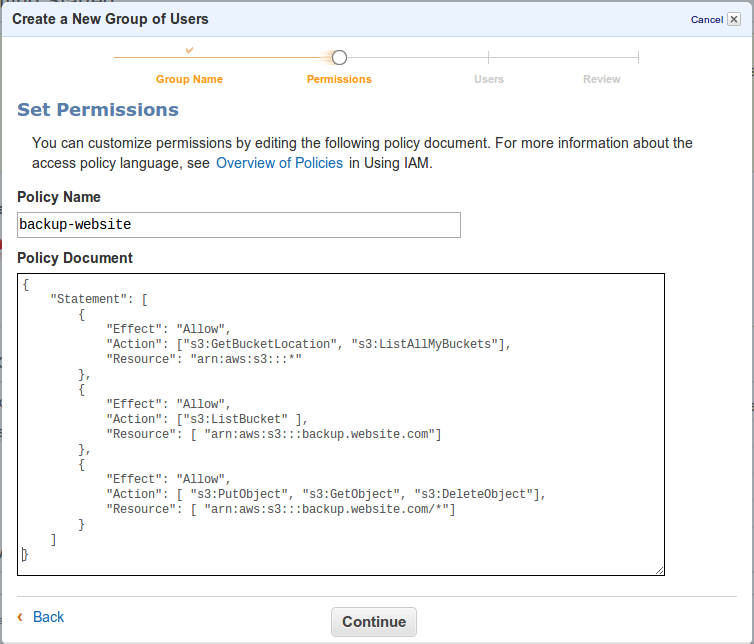

Enter the following custom policy, which allows access only to the bucket that you have just created. Make sure to update the bucket name (in the “Resource” field of the last two statements below) to match the one you set up above.

    {
      "Statement": [
        {
          "Effect": "Allow",
          "Action": ["s3:GetBucketLocation", "s3:ListAllMyBuckets"],
          "Resource": "arn:aws:s3:::*"
        },
        {
          "Effect": "Allow",
          "Action": ["s3:ListBucket"],
          "Resource": ["arn:aws:s3:::backup.website.com"]
        },
        {
          "Effect": "Allow",
          "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
          "Resource": ["arn:aws:s3:::backup.website.com/*"]
        }
      ]
    }
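If you manage several buckets, editing the bucket name by hand in two places is easy to get wrong. As a minimal sketch, the same policy can be generated from a small shell function. The function name `make_policy` is hypothetical and only serves this example; the policy content is the one shown above.

```shell
#!/bin/sh
# Print the single-bucket policy for the bucket given as first argument.
# make_policy is a hypothetical helper name used for illustration only.
make_policy() {
    bucket="$1"
    cat <<EOF
{
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:GetBucketLocation", "s3:ListAllMyBuckets"],
      "Resource": "arn:aws:s3:::*"
    },
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket"],
      "Resource": ["arn:aws:s3:::${bucket}"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
      "Resource": ["arn:aws:s3:::${bucket}/*"]
    }
  ]
}
EOF
}

# Print the policy for the bucket used in this article
make_policy backup.website.com
```

Redirect the output to a file (for instance `make_policy backup.website.com > policy.json`) if you prefer to keep a copy next to your scripts.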

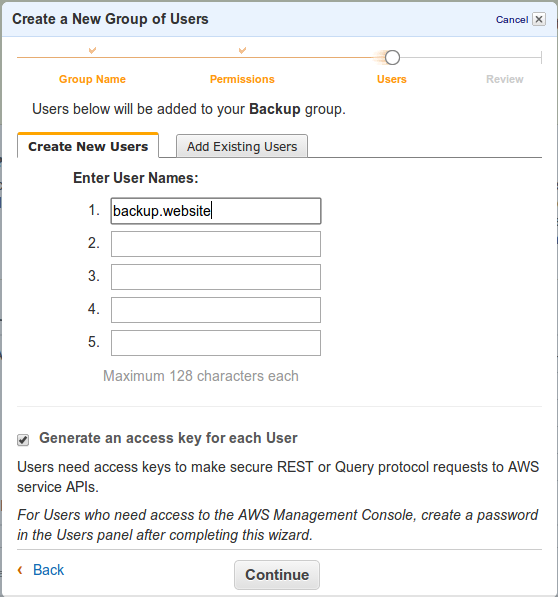

Create a New User to Add to This Group

Since no users were previously defined, we’ll add one to the new group and generate an access key for it.

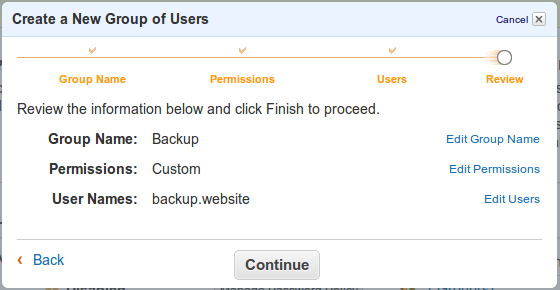

Confirm Changes

Confirm the changes to create the group, the user and the new policy.

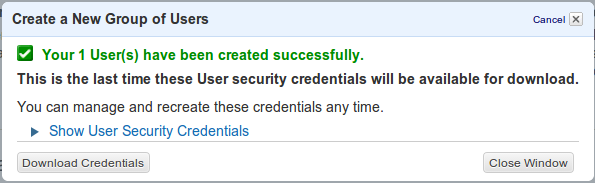

Save Credentials

Save the new user credentials. They will be used later when setting up the host, so make sure that you do not lose them; otherwise you’ll need to generate new ones.
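The console steps above (group, policy, user, access key) can also be scripted. The following is only a sketch, assuming that the AWS command line interface (`aws`) is installed and configured with an administrator account, and that the custom policy has been saved as `policy.json`. The group, user and policy names are hypothetical. By default the script only prints the commands; set `DRY_RUN=0` to actually run them.

```shell
#!/bin/sh
# Hypothetical names, chosen for this example only
GROUP="backup-group"
USER_NAME="backup-user"
POLICY_NAME="backup-bucket-only"

# Print each command instead of running it, unless DRY_RUN=0 is set
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

create_backup_user() {
    # Create the group and attach the custom policy to it
    run aws iam create-group --group-name "$GROUP"
    run aws iam put-group-policy --group-name "$GROUP" \
        --policy-name "$POLICY_NAME" --policy-document file://policy.json
    # Create the user, add it to the group and generate its access key
    run aws iam create-user --user-name "$USER_NAME"
    run aws iam add-user-to-group --group-name "$GROUP" --user-name "$USER_NAME"
    run aws iam create-access-key --user-name "$USER_NAME"
}

create_backup_user
```

The last command is the one that returns the credentials, so its output is what you need to save.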

Everything is now set up on the AWS side to receive the backups. Let’s move to the server and create the script that sends backups to S3 automatically.

Set up Server Side Script

Install s3cmd and s3fs

On the server that will send backups to AWS, we’ll need to install a couple of tools to facilitate the work. The first one, s3cmd, is available in the Ubuntu repositories, while the second one, s3fs, will be compiled from source. Installation of s3fs is based on the official installation notes.

    sudo apt-get install s3cmd
    sudo apt-get install build-essential libcurl4-openssl-dev libxml2-dev libf
    wget https://s3fs.googlecode.com/files/s3fs-1.74.tar.gz
    tar -xvzf s3fs-1.74.tar.gz
    cd s3fs-1.74/
    ./configure --prefix=/usr
    sudo make install
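Before going further, it can be worth checking that the tools actually ended up on the `PATH`. Here is a small sketch of such a check; `check_tools` is a hypothetical helper name, and the list of tools is simply the ones used later in this article.

```shell
#!/bin/sh
# Report which of the given commands are available on the PATH.
# Returns 0 when all of them are found, 1 otherwise.
check_tools() {
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found:   $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# Tools used by the backup script in this article
check_tools s3cmd s3fs fusermount rsync || echo "Install the missing tools before continuing"
```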

Create S3 Folder

To easily access files from S3, we’ll create a specific folder that will be mounted when required.

    sudo mkdir /mnt/s3
    sudo chown userXYZ:userXYZ /mnt/s3

Make sure to update the second line of the code sample above with the user you connect to the server as (userXYZ).

Connect to AWS

In order to mount the folder, we’ll need to connect to AWS with the user that was created previously. To do so automatically, we’ll set up a configuration file containing the user credentials, as instructed in the s3fs documentation.

    echo "xyz:tuv" > ~/.passwd-s3fs
    chmod 600 ~/.passwd-s3fs

Make sure to replace xyz:tuv with the credentials that you obtained from AWS, in the form access key ID, colon, secret access key. The permissions of the file should be set to 600 so that it is only accessible from your user account on the server.
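Since a credentials file with loose permissions is an easy mistake to make, here is a small sketch that checks the file before it is used. `check_passwd_file` is a hypothetical helper name, and the check relies on GNU `stat`, which is an assumption that holds on typical Linux servers.

```shell
#!/bin/sh
# Return 0 if FILE exists and has mode 600, 1 otherwise.
# Relies on GNU stat (-c %a), as found on Linux.
check_passwd_file() {
    file="$1"
    if [ ! -f "$file" ]; then
        echo "missing: $file" >&2
        return 1
    fi
    mode=$(stat -c %a "$file")
    if [ "$mode" != "600" ]; then
        echo "unsafe mode $mode on $file (expected 600)" >&2
        return 1
    fi
    return 0
}

check_passwd_file "$HOME/.passwd-s3fs" || echo "Fix the credentials file before mounting"
```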

Backup Script

Now that everything is in place, we can create the script that will take care of mounting the S3 folder and synchronizing data between the server and AWS. The script below is largely inspired by previous work from John Eberly and Idan. Save it as sync.sh.

    #!/bin/sh

    # Redirect stdout and stderr to the log file
    exec 1> /home/userXYZ/backups/logs/s3.log 2>&1

    # Record the date at which the script was run
    date
    echo "Synchronize backup files from website.com to S3 bucket"

    # Mount the S3 file system using the secure URL
    echo "Mount S3 file system"
    /usr/bin/s3fs -o url=https://s3.amazonaws.com backup.website.com /mnt/s3

    # Sync the files backed up by the server itself
    echo "Sync local backups with S3"
    # rsync -nvaz /home/userXYZ/backups/files/ /mnt/s3 # dry-run if necessary
    rsync -vaz /home/userXYZ/backups/files/ /mnt/s3
    echo "Backup completed"

    # Unmount the S3 file system once the backup is performed
    echo "Unmount S3 file system"
    fusermount -u /mnt/s3
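One weakness of the script as written is that rsync runs even if the mount failed, in which case the files are silently copied to the local /mnt/s3 folder instead of S3. A possible guard, sketched below, is to check the mount table first. The helper names `is_mounted` and `sync_if_mounted` are hypothetical, and reading /proc/mounts is a Linux-specific assumption.

```shell
#!/bin/sh
# Return 0 if MOUNT_POINT appears as a mount point in the mount table.
# The table defaults to /proc/mounts; a second argument overrides it.
is_mounted() {
    mount_point="$1"
    table="${2:-/proc/mounts}"
    awk -v mp="$mount_point" '$2 == mp { found = 1 } END { exit !found }' "$table"
}

# Run the sync only when the S3 folder is really mounted
sync_if_mounted() {
    if is_mounted /mnt/s3; then
        rsync -vaz /home/userXYZ/backups/files/ /mnt/s3
    else
        echo "S3 is not mounted on /mnt/s3, skipping sync" >&2
        return 1
    fi
}
```

In sync.sh, calling `sync_if_mounted` would take the place of the plain rsync line.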

Run the script

To run the script, make it executable first and then run it.

    chmod +x sync.sh
    ./sync.sh

Conclusion

Once this is done, you can easily schedule backups so that the script runs on a regular basis. By setting up a cron job, you can for instance ensure that files are sent to AWS daily (at 00:45 in the example below), so that your off-site backups stay up to date. Adjust the path to wherever you saved sync.sh.

    45 0 * * * /home/dsight_web/backups/scripts/sync.sh
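If you would rather add the entry from a script than through `crontab -e`, the sketch below appends it only when it is not already present, so it can safely be run more than once. `add_cron_entry` is a hypothetical helper name; it works on text only and leaves the actual installation to `crontab`.

```shell
#!/bin/sh
# Read a crontab on stdin and print it with ENTRY appended,
# unless an identical line is already present.
add_cron_entry() {
    entry="$1"
    awk -v e="$entry" '
        { print }
        $0 == e { seen = 1 }
        END { if (!seen) print e }
    '
}

# Typical use (not run here, since it would modify your crontab):
#   crontab -l 2>/dev/null \
#     | add_cron_entry "45 0 * * * /home/dsight_web/backups/scripts/sync.sh" \
#     | crontab -
```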

Annexe 1 - s3cmd

When s3cmd is installed, it is also possible to use it directly to send files individually to AWS. To see the full list of available commands, have a look at the documentation.
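As a quick illustration, here are the three s3cmd commands you are most likely to need, wrapped in small functions. This is a sketch that assumes s3cmd has already been configured with your credentials (`s3cmd --configure`). The function names are hypothetical, and by default the commands are only printed; set `DRY_RUN=0` to run them for real.

```shell
#!/bin/sh
BUCKET="s3://backup.website.com"

# Print each command instead of running it, unless DRY_RUN=0 is set
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Send one local file to the bucket
upload_file() {
    run s3cmd put "$1" "$BUCKET/"
}

# List the content of the bucket
list_bucket() {
    run s3cmd ls "$BUCKET"
}

# Fetch one object from the bucket into a local file
download_file() {
    run s3cmd get "$BUCKET/$1" "$2"
}

upload_file /home/userXYZ/backups/files/site.tar.gz
list_bucket
```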

Annexe 2 - Amazon Free Tier

AWS S3 comes with a free tier. It covers not only the disk space used but also the requests that are made. For instance, a certain number of GET and PUT requests are free each month. Those requests are typically made when adding new files, retrieving the list of files or fetching files from AWS. The backup script presented in this article is not particularly economical in terms of requests made to AWS. You might therefore end up paying a few cents a month if you use the script as-is.

A couple of possible improvements could be:

- do not mount and unmount the s3 folder on the server whenever the backup script runs. Instead, keep it mounted to limit the number of list / get requests that are made.

- do not use rsync to synchronize the files. Instead simply send the new files to backup.
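The second idea could look like the sketch below: instead of letting rsync compare everything, a stamp file records when the last upload happened and only files modified since then are sent with s3cmd. This is an untested outline rather than a drop-in replacement. The helper names and the stamp file are hypothetical, uploaded files all land at the root of the bucket, and commands are only printed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
BUCKET="s3://backup.website.com"

# Print each command instead of running it, unless DRY_RUN=0 is set
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# List regular files under DIR modified after STAMP was last touched
new_files() {
    find "$1" -type f -newer "$2"
}

# Upload the new files, then move the stamp forward
upload_new_files() {
    dir="$1"
    stamp="$2"
    # First run: create a stamp old enough that every file counts as new
    [ -f "$stamp" ] || touch -t 200001010000 "$stamp"
    new_files "$dir" "$stamp" | while IFS= read -r file; do
        run s3cmd put "$file" "$BUCKET/"
    done
    # Only update the stamp when files were really sent
    if [ "${DRY_RUN:-1}" != "1" ]; then
        touch "$stamp"
    fi
}
```

A call such as `upload_new_files /home/userXYZ/backups/files /home/userXYZ/backups/.last-upload` would then replace the mount, rsync and unmount steps. Keep the stamp file outside the backed-up folder, and note that a file modified while the upload is running may be missed until its next change.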

And to avoid any unpleasant surprises, set up your account to send alerts based on the amount that you’ll end up paying. This can be done from your Amazon preferences by enabling Billing Alerts.
